1. Introduction

Kaitai Struct is a domain-specific language (DSL) that is designed with one particular task in mind: dealing with arbitrary binary formats.

Parsing binary formats is hard, and there’s a reason for that: such formats were designed to be machine-readable, not human-readable. Even when one’s working with a clean, well-documented format, there are multiple pitfalls that await the developer: endianness issues, in-memory structure alignment, variable size structures, conditional fields, repetitions, fields that depend on other fields previously read, etc, etc, to name a few.

Kaitai Struct tries to isolate the developer from all these details and allow them to focus on the things that matter: the data structure itself, not particular ways to read or write it.

2. Installation and invocation

Kaitai Struct has a somewhat diverse infrastructure around it. This chapter will give an overview of the options available.

2.1. Web IDE

If you’re going to try Kaitai Struct for the first time, the Web IDE is probably the easiest way to get started. Just open Kaitai Struct Web IDE and you’re ready to go:

A list of Web IDE features is available on the kaitai_struct_webide GitHub wiki.

Note that there are two different versions of the Web IDE:

-

https://ide.kaitai.io/ — stable version, has the stable Kaitai Struct compiler (currently 0.11, released 2025-09-07)

-

https://ide.kaitai.io/devel/ — unstable development version, has the latest compiler (the most recent 0.12-SNAPSHOT)

If you want to use the latest features, use the devel Web IDE.

|

Note

|

The devel Web IDE follows the default

master branch

of the kaitai_struct_webide

repository — it is automatically updated when the

master branch

is updated.

|

2.2. Desktop / console version

If you don’t fancy using a hex dump in a browser, or want to integrate Kaitai Struct into your project build process automation, you’d want a desktop / console solution. Of course, Kaitai Struct offers that as well.

2.2.1. Installation

Please refer to the official website for installation instructions. After installation, you will have:

-

ksc(orkaitai-struct-compiler) — command-line Kaitai Struct compiler, a program that translates.ksyinto parsing libraries in a chosen target language. -

ksv(orkaitai-struct-visualizer, optional) — console visualizer

|

Note

|

ksc or ksv shorthand might be not available if your system doesn’t

support symbolic links — just use the full name in that case.

|

If you’re going to invoke ksc frequently, you’d probably want to add

it to your executable searching PATH, so you don’t have to type the full

path to it every time. You’d get that automatically on .deb package

and Windows .msi install (provided you don’t disable that option) -

but it might take some extra manual setup if you use a generic .zip

package.

2.2.2. Invocation

Invoking ksc is easy:

ksc [options] <file>...Common options:

-

<file>…— source files (.ksy) -

-t <language> | --target <language>— target languages (cpp_stl,csharp,java,javascript,perl,php,python,ruby,all)-

allis a special case: it compiles all possible target languages, creating language-specific directories (as per language identifiers) inside output directory, and then creating output module(s) for each language starting from there

-

-

-d <directory> | --outdir <directory>— output directory (filenames will be auto-generated)

Language-specific options:

-

--dotnet-namespace <namespace>— .NET namespace (C# only, default: Kaitai) -

--java-package <package>— Java package (Java only, default: root package) -

--php-namespace <namespace>— PHP namespace (PHP only, default: root package)

Misc options:

-

--verbose— verbose output -

--help— display usage information and exit -

--version— display version information and exit

3. Workflow overview

The main idea of Kaitai Struct is that you create a description of a binary data

structure format using a formal language, save it as a .ksy file, and

then compile it with the Kaitai Struct compiler into a target programming language.

TODO

4. Kaitai Struct language

With the workflow issues out of the way, let’s concentrate on the Kaitai Struct language itself.

4.1. Fixed-size structures

Probably the simplest thing Kaitai Struct can do is reading fixed-size structures. You might know them as C struct definitions — consider something like this fictional database entry that keeps track of dog show participants:

struct {

char uuid[16]; /* 128-bit UUID */

char name[24]; /* Name of the animal */

uint16_t birth_year; /* Year of birth, used to calculate the age */

double weight; /* Current weight in kg */

int32_t rating; /* Rating, can be negative */

} animal_record;And here is how it would look in .ksy:

meta:

id: animal_record

endian: be

seq:

- id: uuid

size: 16

- id: name

type: str

size: 24

encoding: UTF-8

- id: birth_year

type: u2

- id: weight

type: f8

- id: rating

type: s4It’s a YAML-based format,

plain and simple. Every .ksy file is a type description. Everything

starts with a meta section: this is where we specify top-level info on

the whole structure we describe. There are two important things here:

-

idspecifies the name of the structure -

endianspecifies default endianness:-

befor big-endian (AKA "network byte order", AKA Motorola, etc) -

lefor little-endian (AKA Intel, AKA VAX, etc)

-

With that out of the way, we use a seq element with an array (ordered

sequence of elements) in it to describe which attributes this structure

consists of. Every attribute includes several keys, namely:

-

idis used to give the attribute a name -

typedesignates the attribute type:-

no type means that we’re dealing with just a raw byte array;

sizeis to be used to designate number of bytes in this array -

s1,s2,s4,u1,u2,u4, etc for integers-

"s" means signed, "u" means unsigned

-

number is the number of bytes

-

if you need to specify non-default endianness, you can force it by appending

beorle— i.e.s4be,u8le, etc

-

-

f4andf8for IEEE 754 floating point numbers;4and8, again, designate the number of bytes (single or double precision)-

if you need to specify non-default endianness, you can force it by appending

beorle— i.e.f4be,f8le, etc

-

-

stris used for strings; that is almost the same as "no type", but a string has a concept of encoding, which must be specified usingencoding

-

The YAML-based syntax might look a little more verbose than C-like structs, but there are a few good reasons to use it. It is consistent, it is easily extendable, and it’s easy to parse, so it’s easy to make your own programs/scripts that work with .ksy specs.

4.2. Docstrings

A very simple example is that we can add docstrings to every attribute, using syntax like that:

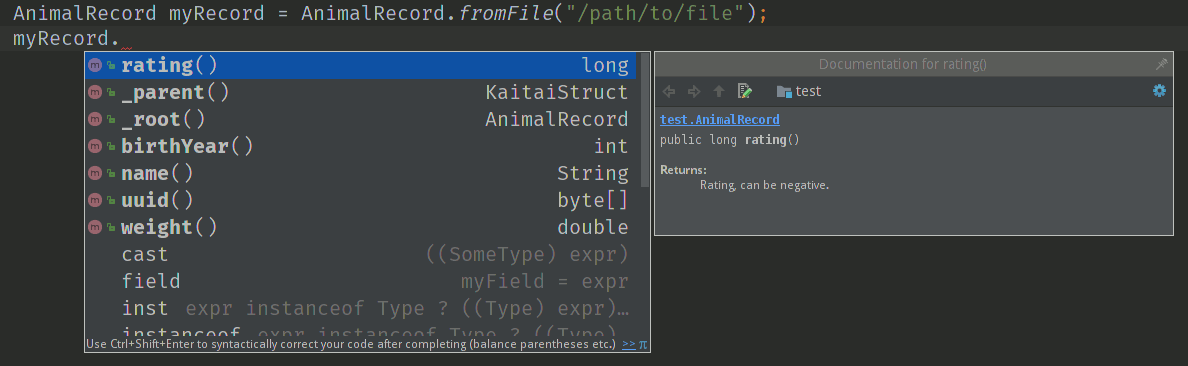

- id: rating

type: s4

doc: Rating, can be negativeThese docstrings are not just the comments in the .ksy file, they’ll actually get exported into the target language as well (for example, in Java they’ll become JavaDoc, in Ruby they’ll become RDoc/YARD, etc). This, in turn, is super helpful when editing the code in various IDEs that will generate reminder popups for intelligent completion, when you browse through class attributes:

doc|

Note

|

You can use YAML folded style strings for longer documentation that spans multiple lines: |

4.3. Checking for "magic" signatures

Many file formats use some sort of safeguard measure against using a completely different file type in place of the required file type. The simple way to do so is to include some "magic" bytes (AKA "file signature"): for example, checking that the first bytes of the file are equal to their intended values provides at least some degree of protection against such blunders.

To specify "magic" bytes (i.e. fixed content) in structures, Kaitai Struct includes

a special contents key. For example, this is the beginning of a seq

for Java .class files:

seq:

- id: magic

contents: [0xca, 0xfe, 0xba, 0xbe]This reads the first 4 bytes and compares them to the 4 bytes CA FE BA BE. If

there is any mismatch (or less than 4 bytes are read),

it throws an exception and stops parsing at an early stage, before any

damage (pointless allocation of huge structures, waste of CPU cycles)

is done.

Note that contents is very flexible and you can specify:

-

A UTF-8 string — bytes from such a string would be checked against

-

An array with:

-

bytes in decimal representation

-

bytes in hexadecimal representation, starting with 0x

-

UTF-8 strings

-

In the case of using an array, all elements' byte representations would be concatenated and expected in sequence. Some examples:

- id: magic1

contents: JFIF

# expects bytes: 4A 46 49 46

- id: magic2

# we can use YAML block-style arrays as well

contents:

- 0xca

- 0xfe

- 0xba

- 0xbe

# expects bytes: CA FE BA BE

- id: magic3

contents: [CAFE, 0, BABE]

# expects bytes: 43 41 46 45 00 42 41 42 45More extreme examples to illustrate the idea (i.e. possible, but definitely not recommended in real-life specs):

- id: magic4

contents: [foo, 0, A, 0xa, 42]

# expects bytes: 66 6F 6F 00 41 0A 2A

- id: magic5

contents: [1, 0x55, '▒,3', 3]

# expects bytes: 01 55 E2 96 92 2C 33 03|

Note

|

There’s no need to specify type or size for fixed contents

data — it all comes naturally from the contents.

|

4.4. Validating attribute values

|

Important

|

Feature available since v0.9. |

To ensure attributes in your data structures adhere to expected formats

and ranges, Kaitai Struct provides a mechanism for validating attribute

values using the valid key. This key allows you to define constraints

for values, enhancing the robustness of your specifications. Here’s how

you can enforce these constraints:

-

eq(or directlyvalid: value): ensures the attribute value exactly matches the given value. -

min: specifies the minimum valid value for the attribute. -

max: specifies the maximum valid value for the attribute. -

any-of: defines a list of acceptable values, one of which the attribute must match. -

expr: an expression that evaluates to true for the attribute to be considered valid.

For most cases, the direct valid: value shortcut is preferred for its simplicity, effectively functioning as valid/eq.

seq:

# Full form of equality constraint: the only valid value is 0x42

- id: exact_value1

type: u1

valid:

eq: 0x42

# Shortcut for the above: the only valid value is 0x42

- id: exact_value2

type: u1

valid: 0x42

# Value must be at least 100 and at most 200

- id: bounded_value

type: u2

valid:

min: 100

max: 200

# Value must be one of 3, 5, or 7

- id: enum_constraint_value

type: u4

valid:

any-of: [3, 5, 7]

# Value must be even

- id: expr_constraint_value

type: u4

valid:

expr: _ % 2 == 0When a value does not meet the specified criteria, Kaitai Struct raises a validation error, halting further parsing. This preemptive measure ensures the data being parsed is within the expected domain, providing a first layer of error handling.

|

Note

|

The actual implementation of validation checks is language-dependent and may vary in behavior and supported features across different target languages. |

4.5. Variable-length structures

Many protocols and file formats tend to conserve bytes, especially for strings. Sure, it’s stupid to have a fixed 512-byte buffer for a string that typically is 3-5 bytes long and only rarely can be up to 512 bytes.

One of the most common methods used to mitigate this problem is to use some integer to designate length of the string, and store only designated number of bytes in the stream. Unfortunately, this yields a variable-length structure, and it’s impossible to describe such a thing using C-style structs. However, it’s not a problem for Kaitai Struct:

seq:

- id: my_len

type: u4

- id: my_str

type: str

size: my_len

encoding: UTF-8Note the size field: we use not a constant, but a reference to a field

that we’ve just parsed from a stream. Actually, you can do much more

than that — you can use a full-blown expression language in size

field. For example, what if we’re dealing with UTF-16 strings and

my_len value designates not a number of bytes, but number of byte

pairs?

seq:

- id: my_len

type: u4

- id: my_str

type: str

size: my_len * 2

encoding: UTF-16LEOne can just multiply my_len by 2 — and voila — here’s our UTF-16

string. Expression language is very powerful, we’ll be talking more

about it later.

Last, but not least, we can specify a size that spans automatically to

the end of the stream. For that one, we’ll use a slightly different

syntax:

seq:

- id: some_int

type: u4

- id: string_spanning_to_the_end_of_file

type: str

encoding: UTF-8

size-eos: true4.6. Delimited structures

|

Note

|

All features specified in this section are demonstrated on strings, but the same features should work on any user types as well. |

Another popular way to avoid allocating huge fixed-size buffers is to use some sort of trailing delimiter. The most well-known example of this is probably the null-terminated string which became a standard string representation in C:

61 62 63 00

These 4 bytes actually represent the 3-character string "abc", plus one extra trailing byte "0" (AKA null) which serves as a delimiter or terminator. By agreement, C strings cannot include a zero byte: every time a function in C sees that either in stream or in memory, it considers that as a special mark to stop processing.

In Kaitai Struct, you can define all sorts of delimited structures. For example, this is how you define a null-terminated string:

seq:

- id: my_string

type: str

terminator: 0

encoding: UTF-8As this is a very common thing, there’s a shortcut for type: str and

terminator: 0. One can write this as:

seq:

- id: my_string

type: strz

encoding: UTF-8Of course, you can use any other byte (for example, 0xa, AKA

newline) as a terminator. This gives Kaitai Struct some limited

capabilities to parse certain text formats as well.

Reading "until the terminator byte is encountered" could be dangerous. What if we never encounter that byte?

Another very widespread model is actually having both a fixed-sized buffer for a string and a terminator. This is typically an artifact of serializing structures like this from C. For example, take this structure:

struct {

char name[16]; /* Name of the animal */

uint16_t birth_year; /* Year of birth, used to calculate the age */

} animal_record;and do the following in C:

struct animal_record rec;

strcpy(rec.name, "Princess");

// then, after some time, the same record is reused

strcpy(rec.name, "Sam");After the first strcpy operation, the buffer will look like:

50 72 69 6e|63 65 73 73|00 ?? ?? ??|?? ?? ?? ??| |Princess.???????|

And after the second strcpy, the following will remain in the

memory:

53 61 6d 00|63 65 73 73|00 ?? ?? ??|?? ?? ?? ??| |Sam.cess.???????|

Effectively, the buffer is still 16 bytes, but the only meaningful

contents it has is up to first null terminator. Everything beyond that

is garbage left over from either the buffer not being initialized at all

(these ?? bytes could contain anything), or it will contain parts of

strings previously occupying this buffer.

It’s easy to model that kind of behavior in Kaitai Struct as well,

just by combining size and terminator:

seq:

- id: name

type: str

size: 16

terminator: 0

encoding: UTF-8This works in 2 steps:

-

sizealways that exactly 16 bytes would be read from the stream. -

terminator, given thatsizeis present, only works inside these 16 bytes, cutting string short early with the first terminator byte encountered, saving application from getting all that trailing garbage.

4.7. Enums (named integer constants)

The nature of binary format encoding dictates that in many cases we’ll be using some kind of integer constants to encode certain entities. For example, an IP packet uses a 1-byte integer to encode the protocol type for the payload: 6 would mean "TCP" (which gives us TCP/IP), 17 would mean "UDP" (which yields UDP/IP), and 1 means "ICMP".

It is possible to live with just raw integers, but most programming languages actually provide a way to program using meaningful string names instead. This approach is usually dubbed "enums" and it’s totally possible to generate an enum in Kaitai Struct:

seq:

- id: protocol

type: u1

enum: ip_protocol

enums:

ip_protocol:

1: icmp

6: tcp

17: udpThere are two things that should be done to declare a enum:

-

We add an

enumskey on the type level (i.e. on the same level asseqandmeta). Inside that key, we add a map, keys of it being enum names (in this example, there’s only one enum declared,ip_protocol) and values being yet another map, which maps integer values into identifiers. -

We add an

enum: …parameter to every attribute that’s going to be represented by that enum, instead of just being a raw integer. Note that such attributes must have some sort of integer type in the first place (i.e.type: u*ortype: s*).

4.8. Substructures (subtypes)

What do we do if we need to use many of the strings in such a format?

Writing so many repetitive my_len- / my_str-style pairs would be so

bothersome and error-prone. Fear not, we can define another type,

defining it in the same file, and use it as a custom type in a stream:

seq:

- id: track_title

type: str_with_len

- id: album_title

type: str_with_len

- id: artist_name

type: str_with_len

types:

str_with_len:

seq:

- id: len

type: u4

- id: value

type: str

encoding: UTF-8

size: lenHere we define another type named str_with_len, which we reference

just by doing type: str_with_len. The type itself is defined using the

types: key at the top level. That’s a map, and inside it we can define as

many subtypes as we want. We define just one, and inside it we nest

the exact same syntax as we use for the type description on the top

level — i.e. the same seq designation.

|

Note

|

There’s no need for meta/id here, as the type name is derived from the

types key name here.

|

Of course, one can actually have more levels of subtypes:

TODO

4.9. Accessing attributes in other types

Expression language (used, for example, in a size key) allows you to

refer not only to attributes in the current type, but also in other types.

Consider this example:

seq:

- id: header

type: main_header

- id: body

size: header.body_len

types:

main_header:

seq:

- id: magic

contents: MY-SUPER-FORMAT

- id: body_len

type: u4If the body_len attribute was in the same type as body, we could just

use size: body_len. However, in this case we’ve decided to split the

main header into a separate subtype, so we’ll have to access it using the .

operator — i.e. size: header.body_len.

One can chain attributes with . to dig deeper into type

hierarchy — e.g. size: header.subheader_1.subsubheader_1_2.field_4.

But sometimes we need just the opposite: how do we access upper-level

elements from lower-level types? Kaitai Struct provides two options here:

4.9.1. _parent

One can use the special pseudo-attribute _parent to access the parent

structure:

TODO4.9.2. _root

In some cases, it would be way too impractical to write tons of

_parent._parent._parent._parent... or just plain impossible (if you’re

describing a type which might be used on several different levels, thus

different number of _parent would be needed). In this case, we can use a

special pseudo-attribute _root to just start navigating from the very

top-level type:

TODO

seq:

- id: header

type: main_header

types:

main_header:

seq:

- id: magic

contents: MY-SUPER-FORMAT

- id: body_len

type: u4

- id: subbody_len

type: u44.10. Conditionals

Some protocols and file formats have optional fields, which only exist

in some conditions. For example, one can have some byte first that

designates if some other field exists (1) or not (0). In Kaitai Struct, you can do that

using the if key:

seq:

- id: has_crc32

type: u1

- id: crc32

type: u4

if: has_crc32 != 0In this example, we again use expression language to specify a boolean

expression in the if key. If that expression is true, the field is parsed and

we’ll get a result. If that expression is false, the field will be skipped

and we’ll get a null (or its closest equivalent in our target

programming language) if we try to get it.

At this point, you might wonder how that plays together with enums. After you mark some integer as "enum", it’s no longer just an integer, so you can’t compare it directly with the number. Instead you’re expected to compare it to other enum values:

seq:

- id: my_animal

type: u1

enum: animal

- id: dog_tag

type: u4

# Comparing to enum literal

if: my_animal == animal::dog

enums:

animal:

1: cat

2: dogThere are other enum operations available, which we’ll cover in the expression language guide later.

4.11. Repetitions

Most real-life file formats do not contain only one copy of some element, but might contain several copies, i.e. they repeat the same pattern over and over. Repetition might be:

-

element repeated up to the very end of the stream

-

element repeated a pre-defined number of times

-

element repeated while some condition is not satisfied (or until some condition becomes true)

Kaitai Struct supports all these types of repetitions. In all cases, it will create a resizable array (or nearest equivalent available in the target language) and populate it with elements.

4.11.1. Repeat until end of stream

This is the simplest kind of repetition, done by specifying

repeat: eos. For example:

seq:

- id: numbers

type: u4

repeat: eosThis yields an array of unsigned integers, each 4 bytes long, which spans till the end of the stream. Note that if we’ve got a number of bytes left in the stream that’s not divisible by 4 (for example, 7), we’ll end up reading as much as possible, and then the parsing procedure will throw an end-of-stream exception.

Of course, you can do this with any type, including user-defined types (subtypes):

seq:

- id: filenames

type: filename

repeat: eos

types:

filename:

seq:

- id: name

type: str

size: 8

encoding: ASCII

- id: ext

type: str

size: 3

encoding: ASCIIThis one defines an array of records of type filename. Each individual

filename consists of 8-byte name and 3-byte ext strings in ASCII

encoding.

4.11.2. Repeat for a number of times

One can repeat an element a certain number of times. For that, we’ll need an expression that will give us the number of iterations (which would be exactly the number of items in resulting array). It could be a simple constant to read exactly 12 numbers:

seq:

- id: numbers

type: u4

repeat: expr

repeat-expr: 12Or we might reference some attribute here to have an array with the length specified inside the format:

seq:

- id: num_floats

type: u4

- id: floats

type: f8

repeat: expr

repeat-expr: num_floatsOr, using expression language, we can even do some more complex math on it:

seq:

- id: width

type: u4

- id: height

type: u4

- id: matrix

type: f8

repeat: expr

repeat-expr: width * heightThis one specifies the width and height of the matrix first, then parses

as many matrix elements as needed to fill a width × height matrix

(although note that it won’t be a true 2D matrix: it would still be just

a regular 1D array, and you’ll need to convert (x, y) coordinates to

indices in that 1D array manually).

4.11.3. Repeat until condition is met

Some formats don’t specify the number of elements in array, but instead

just use some sort of special element as a terminator that signifies end

of data. Kaitai Struct can do that as well using repeat-until syntax, for

example:

seq:

- id: numbers

type: s4

repeat: until

repeat-until: _ == -1This one reads 4-byte signed integer numbers until encountering -1. On

encountering -1, the loop will stop and further sequence elements (if

any) will be processed. Note that -1 would still be added to array.

Underscore (_) is used as a special variable name that refers to the

element that we’ve just parsed. When parsing an array of user types, it

is possible to write a repeat-until expression that would reference some

attribute inside that user type:

seq:

- id: records

type: buffer_with_len

repeat: until

repeat-until: _.len == 0

types:

buffer_with_len:

seq:

- id: len

type: u1

- id: value

size: len4.12. Typical TLV implementation (switching types on an expression)

"TLV" stands for "type-length-value", and it’s a very common staple in

many formats. The basic idea is that we use a modular and

reverse-compatible format. On the top level, it’s very simple: we know

that the whole format is just an array of records (repeat: eos or

repeat: expr). Each record starts the same: there is some marker that

specifies the type of the record and an integer that specifies the record’s

length. After that, the record’s body follows, and the body format

depends on the type marker. One can easily specify that basic record

outline in Kaitai Struct like that:

seq:

- id: rec_type

type: u1

- id: len

type: u4

- id: body

size: lenHowever, how do we specify the format for body that depends on

rec_type? One of the approaches is using conditionals, as we’ve seen

before:

seq:

- id: rec_type

type: u1

- id: len

type: u4

- id: body_1

type: rec_type_1

size: len

if: rec_type == 1

- id: body_2

type: rec_type_2

size: len

if: rec_type == 2

# ...

- id: body_unidentified

size: len

if: rec_type != 1 and rec_type != 2 # and ...However, it’s easy to see why it’s not a very good solution:

-

We end up writing lots of repetitive lines

-

We create lots of

body_*attributes in a type, while in reality only onebodywould exist — everything else would fail theifcomparison and thus would be null -

If we want to catch up the "else" branch, i.e. match everything not matched with our

ifs, we have to write an inverse of sum ofifs manually. For anything more than 1 or 2 types it quickly becomes a mess.

That is why Kaitai Struct offers an alternative solution. We can use a switch type operation:

seq:

- id: rec_type

type: u1

- id: len

type: u4

- id: body

size: len

type:

switch-on: rec_type

cases:

1: rec_type_1

2: rec_type_2This is much more concise and easier to maintain, isn’t it? And note

that size is specified on the attribute level, thus it applies to all

possible type values, setting us a good hard limit. What’s even better —

even if you’re missing the match, as long as you have size specified,

you would still parse body of a given size, but instead of

interpreting it with some user type, it will be treated as having no

type, thus yielding a raw byte array. This is super useful, as it

allows you to work on TLV-like formats step-by-step, starting by

supporting only 1 or 2 types of records, and gradually adding more and

more types.

|

Caution

|

One needs to make sure that the type used in Here, |

You can use "_" for the default (else) case which will match every other value which was not listed explicitly.

type:

switch-on: rec_type

cases:

1: rec_type_1

2: rec_type_2

_: rec_type_unknownSwitching types can be a very useful technique. For more advanced usage examples, see Advanced switching.

4.13. Instances: data beyond the sequence

So far we’ve done all the data specifications in seq — thus they’ll

get parsed immediately from the beginning of the stream, one-by-one, in

strict sequence. But what if the data you want is located at some other

position in the file, or comes not in sequence?

"Instances" are Kaitai Struct’s answer for that. They’re specified

in a key instances on the same level as seq. Consider this example:

meta:

id: big_file

endian: le

instances:

some_integer:

pos: 0x400000

type: u4

a_string:

pos: 0x500fff

type: str

size: 0x11

encoding: ASCIIInside instances we need to create a map: keys in that map are

attribute names, and values specify attribute in the very same manner as

we would have done it in seq, but there is one important additional

feature: using pos: … one can specify a position to start parsing

that attribute from (in bytes from the beginning of the stream). Just as

in size, one may use expression language and reference other

attributes in pos. This is used very often to allow accessing a file

body inside a container file when we have some file index data: file

position in container and length:

seq:

- id: file_name

type: str

size: 8 + 3

encoding: ASCII

- id: file_offset

type: u4

- id: file_size

type: u4

instances:

body:

pos: file_offset

size: file_sizeAnother very important difference between the seq attribute and the

instances attribute is that instances are lazy by default. What does

that mean? Unless someone would call that body getter method

programmatically, no actual parsing of body would be done. This is

super useful for parsing larger files, such as images of filesystems. It

is impractical for a filesystem user to load all the filesystem data

into memory at once: one usually finds a file by its name (traversing a

file index somehow), and then can access file’s body right away. If

that’s the first time this file is being accessed, body will be loaded

(and parsed) into RAM. Second and all subsequent times will just return

a cached copy from the RAM, avoiding any unnecessary re-loading /

re-parsing, thus conserving both RAM and CPU time.

Note that from the programming point of view (from the target

programming languages and from internal Kaitai Struct’s expression

language), seq attributes and instances are exactly the same.

4.14. Value instances

Sometimes, it is useful to transform the data (using expression

language) and store it as a named value. There’s another sort of

instances for that — value instances. They’re very

simple to use, there’s only one key in it — value — that specifies an

expression to calculate:

seq:

- id: length_in_feet

type: f8

instances:

length_in_m:

value: length_in_feet * 0.3048Value instances do no actual parsing, and thus do not require a pos

key or a type key (the type will be derived automatically). If you need

to enforce the type of the expression, see typecasting.

4.15. Bit-sized integers

Quite a few protocols and file formats, especially those which aim to conserve space, pack multiple integers into one byte, using integer sizes less than 8 bits. For example, an IPv4 packet starts with a byte that packs both a version number and header length:

76543210 vvvvllll │ │ │ └─ header length └───── version

It’s possible to unpack bit-packed integers using old-school methods with bitwise operations in value instances:

seq:

- id: packed_1

type: u1

instances:

version:

value: (packed_1 & 0b11110000) >> 4

len_header:

value: packed_1 & 0b00001111However, Kaitai Struct offers a better way to do it — using bit-sized integers.

4.15.1. Big-endian order

|

Important

|

Feature available since v0.6. |

Here’s how the above IPv4 example can be parsed with Kaitai Struct:

meta:

bit-endian: be

seq:

- id: version

type: b4

- id: len_header

type: b4Using the meta/bit-endian key, we specify big-endian bit field order

(see Specifying bit endianness for more info). In this mode, Kaitai Struct starts parsing bit

fields from the most significant bit (MSB, 7) to the least significant bit

(LSB, 0). In this case, "version" comes first and "len_header" second.

The bit layout for the above example looks like this:

d[0]

7 6 5 4 3 2 1 0

v3 v2 v1 v0 h3 h2 h1 h0

───────┬────── ───────┬──────

version len_header

d[0] is the first byte of the stream, and the numbers 7-0 on the line

below indicate the invididual bits of this byte (listed from MSB 7 to LSB 0).

The value of version can be retrieved as 0b{v3}{v2}{v1}{v0}

(0b… is the binary integer literal as present in many programming

languages, and {v3} is the value 0 or 1 of the corresponding bit),

and the len_header value can be retrieved as 0b{h3}{h2}{h1}{h0}.

Using type: bX (where X is a number of bits to read) is very

versatile and can be used to read byte-unaligned data. A more complex

example of packing, where value spans two bytes:

d[0] d[1]

7 6 5 4 3 2 1 0 7 6 5 4 3 2 1 0

a4 a3 a2 a1 a0 b8 b7 b6 b5 b4 b3 b2 b1 b0 c1 c0

─────────┬──────── ─────────────────┬────────────────── ───┬──

a b c

│ ───────────────────────────> │ │ ───────────────────────────> │

parsing direction ╷ ↑

└┄┄┘

meta:

bit-endian: be

seq:

- id: a

type: b5

- id: b

type: b9

# 3 bits (b{8-6}) + 6 bits (b{5-0})

- id: c

type: b2|

Note

|

Why is this order of bit field members called "big-endian"? Because

the parsing results are equivalent to first reading a packed

integer in big-endian byte order and then extracting the values

using bitwise operators ( Using the same logic, little-endian bit integers correspond to unpacking a little-endian integer instead. See Little-endian order for more info. |

Or it can be used to parse completely unaligned bit streams with repetitions. In this example, we parse an arbitrary number of 3-bit values:

d[0] d[1] d[2] d[3]

76543210 76543210 76543210 76543210

nnnnnnnn 00011122 23334445 55666777 ...

________ ‾‾‾___‾‾‾‾___‾‾‾____

╷ │ ╷ │ ╷ │ ╷

num_threes ────┘ │ │ │ │ │ │

threes[0] ──────────┘ │ │ │ │ │

threes[1] ─────────────┘ │ │ │ │

threes[2] ────────────────┘ │ │ │

threes[3] ────────────────────┘ │ │

threes[4] ───────────────────────┘ │

threes[5] ───────────────────────────┘

...

meta:

bit-endian: be

seq:

- id: num_threes

type: u1

- id: threes

type: b3

repeat: expr

repeat-expr: num_thress|

Important

|

By default, if you mix "normal" byte-sized integers (i.e. two bytes will get parsed like this: 76543210 76543210

ffffff bbbbbbbb

──┬─── ───┬────

| |

foo ──┘ |

bar ────────────┘

i.e. the two least significant bits of the first byte would be lost and not parsed due to alignment. |

4.15.2. Little-endian order

|

Important

|

Feature available since v0.9. |

Most formats using little-endian byte order with packed multi-byte bit fields (e.g. android_img, rar or swf) assume that such bit fields are unpacked manually using bitwise operators from a little-endian integer parsed in advance containing the whole bit field. The bit layout of the field is designed accordingly.

For example, consider the following bit field:

seq:

- id: packed

type: u2le

instances:

a:

value: (packed & 0b11111000_00000000) >> (3 + 8)

b:

value: (packed & 0b00000111_11111100) >> 2

c:

value: packed & 0b00000000_00000011The expressions for extracting the values look exactly the same as

for the big-endian order, but the actual bit layout

will be different, because here the packed integer is read

in little-endian (LE) byte order.

Given that d is a 2-byte array needed to parse an unsigned 2-byte

integer, the numeric value of a BE integer is

0x{d[0]}{d[1]} (0x… is the hexadecimal integer literal

and {d[0]} is the hex value of byte d[0] as seen in hex dumps,

e.g. 02 or 7f), whereas the value of a LE integer

would be 0x{d[1]}{d[0]}.

It follows that if we read a BE integer from a new byte array

[d[1], d[0]] (i.e. d reversed), we’ll get the same result

as when reading a LE integer from the original d array.

Because we’ve already explained how bit-integers work in

big-endian order, let’s repeat this method for the

above bitfield on the byte array d reversed (d[1] d[0]) and then

swap the bytes back to the original order of d (d[0] d[1]):

d[1] d[0]

7 6 5 4 3 2 1 0 7 6 5 4 3 2 1 0

a4 a3 a2 a1 a0 b8 b7 b6 b5 b4 b3 b2 b1 b0 c1 c0

──────────────┬─────────────── ───────────────┬──────────────

└──────────────╷ ╷───────────────┘

╲ ╱

╳

╱ ╲

┌──────────────╵ ╵──────────────┐

d[0] d[1]

7 6 5 4 3 2 1 0 7 6 5 4 3 2 1 0

b5 b4 b3 b2 b1 b0 c1 c0 a4 a3 a2 a1 a0 b8 b7 b6

──────────┬─────────── ──┬─── ─────────┬──────── ────┬─────

b c a b

│ <──────────────────────────── │ │ <──────────────────────────── │

╷ parsing direction ↑

└┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈>┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┈┘

As you can guess from the bit layout, you can’t use big-endian

bit integers here without splitting the b value into 2 separate

members.

This is because each byte in a big-endian bit field is gradually "filled"

with members from the most significant bit (7) to the least significant (0),

and if the current byte is filled up to LSB, the parsing continues on

MSB of next byte. It follows that b really can’t be represented

with a single attribute using this order, because c and a are standing

in the way.

Little-endian bit fields use the reversed parsing direction: bytes are filled from LSB (0) to MSB (7), and after filling the byte up to MSB, values overflow to the next byte’s LSB.

For example, the above bit layout can be conveniently represented using little-endian bit integers:

meta:

bit-endian: le

seq:

- id: c

type: b2

- id: b

type: b9

# 6 bits (b{5-0}) + 3 bits (b{8-6})

- id: a

type: b5As you can see in the KSY snippet, the bit field members in seq

are listed from the least significant value to the most significant.

If we look at the bit masks of bit field members (which can be

directly used for ANDing & with the 2-byte little-endian unsigned

value), they would be sorted in ascending order (starting with

the least significant value):

c 0b00000000_00000011 b 0b00000111_11111100 a 0b11111000_00000000

This may seem strange at first, but it’s actually natural from the perspective of how little-endian bit fields work, and how they physically store their members.

Thanks to this order, Kaitai Struct doesn’t need to know the byte

size of the whole bitfield in advance (so that its members could

be rearranged at compile-time to match their physical location),

and it can normally parse the attributes on the run.

It follows that little-endian bit-sized integers can be normally

combined with if conditions and repetitions like any other Kaitai Struct type.

4.15.3. Specifying bit endianness

The key meta/bit-endian specifies the default parsing direction

(bit endianness) of bit-sized integers. It can only have the

literal value le or be (run-time switching

is not supported).

|

Important

|

Support for However, if you don’t really need to support pre-0.9 KSC

versions, it’s recommended to state |

Like meta/endian, meta/bit-endian also applies to bX attributes

in the current type and all subtypes, but it can be overridden

using the le/be suffix (bXle/bXbe) for the individual bit

integers. For example:

meta:

bit-endian: le

seq:

- id: foo

type: b2 # little-endian

types:

my_subtype:

seq:

- id: bar

type: b8 # also little-endian

- id: baz

type: b16be # big-endian|

Important

|

Big-endian and little-endian bit integers can follow only on a byte boundary. They can’t share the same byte. Joining them on an unaligned bit position is undefined behavior, and future versions of KSC will throw a compile-time error if they detect such a situation. For example, this is illegal: |

4.16. Documenting your spec

We introduced the doc key early in this user guide as

a simple way to add docstrings to the attributes. However, it’s not

only attributes that can be documented. The same doc key can be used

in several different contexts:

doc: |

Documentation for type. Works for top-level types too, in case you

were wondering.

seq:

- id: attr_1

type: u1

doc: Documentation for sequence attribute.

instances:

attr_2:

pos: 0x1234

type: u1

doc: Documentation for parse instance attribute.

attr_3:

value: attr_2 + 1

doc: Documentation for value instance attribute.

types:

some_type:

doc: Documentation for type as well. Works for inner types too.

params:

- id: param_1

type: u1

doc: |

Documentation for a parameter. Parameters are a relatively

advanced topic, see below for the explanations.4.16.1. doc-ref

The doc key has a "sister" key doc-ref, which can be used to specify

references to original documentation. This is very useful to keep

track of what corresponds to what when transcribing an existing

specification. Everywhere where you can use doc, you can use

doc-ref as well. Depending on the target language, this key would be

rendered as something akin to a "see also" extra paragraph after the

main docstring. For example:

Kaitai Struct

|

Java

|

Inside doc-ref, one can specify:

-

Just a user-readable string. Most widely used to reference offline documentation. User would need to find relevant portion of documentation manually.

doc-ref: ISO-9876 spec, section 1.2.3 -

Just a link. Used when existing documentation has a non-ambiguous, well defined URL that everyone can refer to, and there’s nothing much to add to it.

doc-ref: https://www.youtube.com/watch?v=dQw4w9WgXcQ -

Link + description. Used when adding some extra text information is beneficial: for example, when a URL is not enough and needs some comments on how to find relevant info inside the document, or the document is also accessible through some other means and it’s useful to specify both URL and section numbering for those who won’t be using the URL. In this case,

doc-refis composed of a URL, then a space, then a description.doc-ref: https://tools.ietf.org/html/rfc2795#section-6.1 RFC2795, 6.1 "SIMIAN Client Requests"

4.16.2. -orig-id

When transcribing spec based on some existing implementation, most

likely you won’t be able to keep exact same spelling of all

identifiers. Kaitai Struct imposes pretty draconian rules on what can

be used as id, and there is a good reason for it: different target

languages have different ideas of what constitutes a good identifier,

so Kaitai Struct had to choose some "middle ground" that yields decent

results when converted to all supported languages' standards.

However, in many cases, it might be useful to keep references to how

things were named in original implementation. For that, one can

customarily use -orig-id key:

seq:

- id: len_str_buf

-orig-id: StringBufferSize

type: u4

- id: str_buf

-orig-id: StringDataInputBuffer

size: len_str_buf|

Tip

|

The Kaitai Struct compiler will just ignore any key that starts with

|

4.16.3. Verbose enums

|

Important

|

Feature available since v0.8. |

If you want to add some documentation for enums, this is possible using verbose enums declaration:

enums:

ip_protocol:

1:

id: icmp

doc: Internet Control Message Protocol

doc-ref: https://www.ietf.org/rfc/rfc792

6:

id: tcp

doc: Transmission Control Protocol

doc-ref: https://www.ietf.org/rfc/rfc793

17:

id: udp

doc: User Datagram Protocol

doc-ref: https://www.ietf.org/rfc/rfc768In this format, instead of specifying just the identifier for every

numeric value, you specify a YAML map, which has an id key for

the identifier, and allows other regular keys (like doc and doc-ref)

to specify documentation.

4.17. Meta section

The meta key is used to define a section which stores meta-information

about a given type, i.e. various complimentary stuff, such as titles,

descriptions, pointers to external linked resources, etc:

-

id -

title -

application -

file-extension -

xref— used to specify cross-references -

license -

tags -

ks-version -

ks-debug -

ks-opaque-types -

imports -

encoding -

endian

|

Tip

|

While it’s technically possible to specify meta keys in

arbitary order (as in any other YAML map), please use the order

recommended in the KSY style guide when

authoring .ksy specs for public use to improve readability.

|

4.17.1. Cross-references

meta/xref can be used to provide arbitrary cross-references for a

particular type in other collections, such as references / IDs in

format databases, wikis, encyclopedias, archives, formal standards,

etc. Syntactically, it’s just a place where you can store arbitrary

key-value pairs, e.g.:

meta:

xref:

forensicswiki: portable_network_graphics_(png)

iso: '15948:2004'

justsolve: PNG

loc: fdd000153

mime: image/png

pronom:

- fmt/13

- fmt/12

- fmt/11

rfc: 2083

wikidata: Q178051There are several "well-known" keys used by convention by many spec authors to provide good cross references of their formats:

-

forensicswikispecifies an article name at Forensics Wiki, which is a CC-BY-SA-licensed wiki with information on digital forensics, file formats and tools. A full link could be generated ashttps://forensics.wiki/+ this value +/.For consistency, insert parentheses in this value literally (without using percent-encoding) - e.g. use

extended_file_system_(ext)instead ofextended_file_system_%28ext%29. -

isospecifies an ISO/IEC standard number, a reference to a standard accepted and published by ISO (International Organization for Standardization). Typically these standards are not available for free (i.e. one has to pay to get a copy of a standard from ISO), and it’s non-trivial to link to the ISO standards catalogue. However, ISO standards typically have clear designations like "ISO/IEC 15948:2004", so the value should cite everything except for "ISO/IEC", e.g.15948:2004. -

justsolvespecifies an article name at "Just Solve the File Format Problem" wiki, a wiki that collects information on many file formats. A full link could be generated ashttp://fileformats.archiveteam.org/wiki/+ this value. -

locspecifies an identifier in the Digital Formats database of the US Library of Congress, a major effort to enumerate and document many file formats for digital preservation purposes. The value typically looks likefddXXXXXX, whereXXXXXXis a 6-digit identifier. -

mimespecifies a MIME (Multipurpose Internet Mail Extensions) type, AKA "media type" designation, a string typically used in various Internet protocols to specify format of binary payload. As of 2019, there is a central registry of media types managed by IANA. The value must specify the full MIME type (both parts), e.g.image/png. -

pronomspecifies a format identifier in the PRONOM Technical Registry of the UK National Archives, which is a massive file formats database that catalogues many file formats for digital preservation purposes. The value typically looks likefmt/xxx, wherexxxis a number assigned at PRONOM (this idenitifer is called a "PUID", AKA "PRONOM Unique Identifier" in PRONOM itself). If many different PRONOM formats correspond to a particular spec, specify them as a YAML array (see example above). -

rfcspecifies a reference to RFC, "Request for Comments" documents maintained by ISOC (Internet Society). Despite the confusing name, RFCs are typically treated as global, Internet-wide standards, and, for example, many networking / interoperability protocols are specified in RFCs. The value should be just the raw RFC number, without any prefixes, e.g.1234. -

wikidataspecifies an item name at Wikidata, a global knowledge base. All Wikimedia projects (such as language-specific Wikipedias, Wiktionaries, etc) use Wikidata at least for connecting various translations of encyclopedic articles on a particular subject, so keeping just a link to Wikidata is typically enough to. The value typically follows aQxxxpattern, wherexxxis a number generated by Wikidata, e.g.Q535473.

5. Streams and substreams

Imagine that we’re dealing with a structure of known size. For the sake of simplicity, let’s say that it’s fixed to exactly 20 bytes (but all the following is also true if the size is defined by some arbitrarily complex expression):

types:

person:

seq:

- id: code

type: u4

- id: name

type: str

size: 16When we’re invoking user-defined types, we can do either:

seq:

- id: joe

type: personor:

seq:

- id: joe

type: person

size: 20Note the subtle difference: we’ve skipped the size in the first example

and added it in the second one. From the end-user’s perspective, nothing

has changed. You can still access Joe’s code and name equally well in

both cases:

r.joe().code() // works

r.joe().name() // worksHowever, what gets changed under the hood? It turns out that

specifying size actually brings some new features: if you modify the

person type to be less than 20 bytes long, it still reserves exactly

20 bytes for joe:

seq:

- id: joe # reads from position 0

type: person

size: 20

- id: foo

type: u4 # reads from position 20

types:

person: # although this type is 14 bytes long now

seq:

- id: code

type: u4

- id: name

type: str

size: 10In this example, the extra 6 bytes would just be skipped. Alternatively,

if you make person to be more than 20 bytes long, it will

trigger an end-of-stream exception:

seq:

- id: joe

type: person

size: 20

- id: foo

type: u4

types:

person: # 100 bytes is longer than 20 bytes declared in `size`

seq:

- id: code

type: u4

- id: name # will trigger an exception here

type: str

size: 96How does it work? Let’s take a look under the hood.

Sizeless user type invocation generates the following parsing code:

this.joe = new Person(this._io, this, _root);However, when we declare the size, things get a little bit more

complicated:

this._raw_joe = this._io.readBytes(20);

KaitaiStream _io__raw_joe = new KaitaiStream(_raw_joe);

this.joe = new Person(_io__raw_joe, this, _root);Every class that Kaitai Struct generates carries a concept of a "stream", usually

available as an _io member. This is the default stream it reads from

and writes to. This stream works just as you might expect from a

regular IO stream implementation in a typical language: it

encapsulates reading from files and memory, stores a pointer to its

current position, and allows reading/writing of various primitives.

Declaring a new user-defined type in the middle of the seq attributes

generates a new object (usually via a constructor call), and this object,

in turn, needs its own IO stream. So, what are our options here?

-

In the "sizeless" case, we just pass the current

_ioalong to the new object. This "reuses" the existing stream with all its properties: current pointer position, size, available bytes, etc. -

In the "sized" case, we know the size a priori and want the object we created to be limited within that size. So, instead of passing an existing stream, we create a new substream that will be shorter and will contain the exact number of bytes requested.

Implementations vary from language to language, but, for example, in Java, the following is done:

// First, we read as many bytes as needed from our current IO stream.

// Note that if we don't even have 20 bytes right now, this will throw

// an EOS exception on this line, and the user type won't even be invoked.

this._raw_joe = this._io.readBytes(20);

// Second, we wrap our bytes into a new stream, a substream

KaitaiStream _io__raw_joe = new KaitaiStream(_raw_joe);

// Finally, we pass our substream to the Person class instead of

this.joe = new Person(_io__raw_joe, this, _root);After that, parsing of a person type will be totally bound to the limits

of that particular substream. Nothing in the Person class

can do a thing to the original stream — it just doesn’t have access to

that object.

Let’s check out a few use cases that demonstrate how powerful this practice can be.

5.1. Limiting total size of structure

Quite often binary formats use the following technique:

-

First comes some integer that declares the total size of the structure (or the structure’s body, i.e. everything minus this header).

-

Then comes the structure’s body, which is expected to have exactly the declared number of bytes.

Consider this example:

seq:

- id: body_len

type: u4

# The following must be exactly `body_len` bytes long

- id: uuid

size: 16

- id: name

type: str

size: 24

- id: price

type: u4

# This "comment" entry must fill up all remaining bytes up to the

# total of `body_len`.

- id: comment

size: ???Of course, one can derive this manually:

-

body_len = sizeof(uuid) + sizeof(name) + sizeof(price) + sizeof(comment)

-

body_len = 16 + 24 + 4 + sizeof(comment)

-

sizeof(comment) = body_len - (16 + 24 + 4)

-

sizeof(comment) = body_len - 44

Thus:

- id: comment

size: body_len - 44But this is very inconvenient and potentially error prone. What will happen if at some time in future the record contents are updated and we forget to update this formula?

It turns out that substreams offer a much cleaner solution here. Let’s

separate our "header" and "body" into two distinct user types, and

then we can just specify size on this body:

seq:

- id: body_len

type: u4

- id: body

type: record_body

size: body_len

# ^^ This is where substream magic kicks in

types:

record_body:

seq:

- id: uuid

size: 16

- id: name

type: str

size: 24

- id: price

type: u4

- id: comment

size-eos: trueFor comment, we just made its size to be up until the end of

stream. Given that we’ve limited it to the substream in the first

place, this is exactly what we wanted.

5.2. Repeating until total size reaches limit

The same technique might be useful for repetitions as well. If you have an array of same-type entries, and a format declares the total size of all entries combined, again, you can try to do this:

seq:

- id: total_len

type: u4

- id: entries

type: entry

repeat: expr

repeat-expr: ???And do some derivations to calculate number of entries, i.e. "total_len / sizeof(entry)". But, again, this is bad because:

-

You need to keep remembering to update this "sizeof" value when the entry size updates.

-

If the entry size if not fixed, you’re totally out of luck here.

Solving it using substreams is much more elegant. You just create a

substream limited to total_len bytes, and then use repeat: eos to

repeat until the end of that stream.

|

Caution

|

However, note that one’s naïve approach might not work:

So this is wrong ( For more information, see Keys relating to the whole array and to each element in repeated attributes. The proper solution is to add an extra layer of types: |

5.3. Relative positioning

Another useful feature that’s possible with substreams is the fact that

while you’re in a substream, the pos key works in the context of that

substream as well. That means it addresses data relative to the start of that

substream:

seq:

- id: some_header

size: 20

- id: body

type: block

size: 80

types:

block:

seq:

- id: foo

type: u4

instances:

some_bytes_in_the_middle:

pos: 30

size: 16In this example, body allocates a substream spanning from 20th byte

(inclusive) till 100th byte (exclusive). Then, in that stream:

-

foowould be parsed right from the beginning of that substream, thus taking up bytes[20..24) -

some_bytes_in_the_middlewould start parsing 16 bytes from the 30th byte of that substream, thus parsing bytes[20 + 30 .. 20 + 46)=[50..66)in the main stream.

This comes super handy if your format’s internal structures somehow specify offsets relative to some other structures of the format. For example, a typical filesystem/database often uses a concept of blocks, and offsets that address stuff inside the current block. Note how KSY with substreams is easier to read, more concise and less error-prone:

Bad (w/o substream)

|

Good (w/substream)

|

The more levels of structure offset nesting there are, the more

complicated these pos expressions would get without substreams.

5.4. Absolute positioning

If you ever need to "escape" the limitations of a substream by

wishing to use a pos key of a parse instance to address something absolutely

(i.e. in the main stream), it’s easy to do so by adding an io key to

choose the root’s stream:

seq:

- id: some_header

size: 20

- id: files

size: 80

type: file_entry

repeat: eos

types:

file_entry:

seq:

- id: file_name

type: strz

- id: ofs_body

type: u4

- id: len_body

type: u4

instances:

body:

io: _root._io

pos: ofs_body

size: len_bodyThat’s the typical situation encountered in many file container

formats. Here we have a list of files, and each of its entries has

been limited to exactly 80 bytes. Inside each 80-byte chunk, there’s a

file_name, and, more importantly, a pointer to the absolute location of

the file’s body inside the stream. The body instance allows us to get that

file’s body contents quickly and easily. Note that if there was no

io: _root._io key there, that body would have been parsed inside a

80-byte substream (and most likely that would result in an exception

trying to read outside of the 80 byte limit), and that’s not what we want

here.

5.5. Choosing a substream

The technique above is not limited to just the root object’s stream. You can address any other object’s stream as well, for example:

seq:

- id: global_header

size: 1024

- id: block_one

type: big_container

size: 4096

- id: block_two

type: smaller_container

size: 1024

types:

big_container:

seq:

- id: some_header

size: 8

# the rest of the data in this container would be referenced

# from other blocks

smaller_container:

seq:

- id: ofs_in_big

type: u4

- id: len_in_big

type: u4

instances:

something_in_big:

io: _root.block_one._io

pos: ofs_in_big

size: len_in_big5.6. Processing: dealing with compressed, obfuscated and encrypted data

Some formats obscure the data fully or partially with techniques like compression, obfuscation or encryption. In these cases, incoming data should be pre-processed before actual parsing takes place, or we’ll just end up with garbage getting parsed. All such pre-processing algorithms have one thing in common: they’re done by some function that takes a stream of bytes and returns another stream of bytes (note that the number of incoming and resulting bytes might be different, especially in the case of decompression). While it might be possible to do such transformation in a declarative manner, it is usually impractical to do so.

Kaitai Struct allows you to plug-in some predefined "processing" algorithms to do decompression, de-obfuscation and decryption to get a clear stream, ready to be parsed. Consider parsing a file, in which the main body is obfuscated by applying XOR with 0xaa for every byte:

seq:

- id: body_len

type: u4

- id: body

size: body_len

process: xor(0xaa)

type: some_body_type # defined normally laterNote that:

-

Applying

process: …is available only to raw byte arrays or user types. -

One might use expression language inside

xor(…), thus referencing the XOR obfuscation key read into some other field previously.

6. Expression language

Expression language is a powerful internal tool inside Kaitai Struct. In a nutshell, it is a simple object-oriented, statically-typed language that gets translated/compiled (AKA "transpiled") into any supported target programming language.

The language is designed to follow the principle of least surprise, so it borrows tons of elements from other popular languages, like C, Java, C#, Ruby, Python, JavaScript, Scala, etc.

6.1. Basic data types

Expression language operates on the following primitive data types:

| Type | Attribute specs | Literals |

|---|---|---|

Integers |

|

|

Floating point numbers |

|

|

Booleans |

|

|

Byte arrays |

|

|

Strings |

|

|

Enums |

( |

|

Streams |

N/A |

N/A |

Integers come from uX, sX, bX type specifications in sequence

or instance attributes (i.e. u1, u4le, s8, b3, etc), or can be

specified literally. One can use:

-

decimal form (e.g.

123) -

hexadecimal form using

0xprefix (e.g.0xcafe— both upper case and lower case letters are legal, i.e.0XcAfEor0xCAfewill do as well) -

binary form using

0bprefix (e.g.0b00111011) -

octal form using

0oprefix (e.g.0o755)

It’s possible to use _ as a visual separator in literals — it will

be completely ignored by the parser. This could be useful, for example,

to:

-

visually separate thousands in decimal numbers:

123_456_789 -

show individual bytes/words in hex:

0x1234_5678_abcd -

show nibbles/bytes in binary:

0b1101_0111

Floating point numbers also follow the normal notation used in the vast

majority of languages: 123.456 will work, as well as exponential

notation: 123.456e-55. Use 123.0 to enforce floating point type to

an otherwise integer literal.

Booleans can be specified as literal true and false values as in

most languages, but also can be derived by using type: b1. This

method parses a single bit from a stream and represents it as a

boolean value: 0 becomes false, 1 becomes true. This is very useful to

parse flag bitfields, as you can omit flag_foo != 0 syntax and just

use something more concise, such as is_foo.

Byte arrays are defined in the attribute syntax when you don’t

specify anything as type. The size of a byte array is thus determined

using size, size-eos or terminator, one of which is mandatory in

this case. Byte array literals use typical array syntax like the one

used in Python, Ruby and JavaScript: i.e. [1, 2, 3]. There is a

little catch here: the same syntax is used for "true" arrays of

objects (see below), so if you try to do stuff like [1, 1000, 5]

(1000 obviously won’t fit in a byte), you won’t get a byte array,

you’ll get an array of integers instead.

Strings normally come from using type: str (or type: strz, which

is a shortcut that implicitly adds terminator: 0).

Literal strings can be specified using double quotes or single

quotes. The meaning of single and double quotes is similar to those of

Ruby, PHP and Shell script:

-

Single quoted strings are interpreted literally, i.e. backslash

\, double quotes"and other possible special symbols carry no special meaning, they would be just considered a part of the string. Everything between single quotes is interpreted literally, i.e. there is no way one can include a single quote inside a single quoted string. -

Double quoted strings support escape sequences and thus allow you to specify any characters. The supported escape sequences are as follows:

| Escape seq | Code (dec) | Code (hex) | Meaning |

|---|---|---|---|

|

7 |

0x7 |

bell |

|

8 |

0x8 |

backspace |

|

9 |

0x9 |

horizontal tab |

|

10 |

0xa |

newline |

|

11 |

0xb |

vertical tab |

|

12 |

0xc |

form feed |

|

13 |

0xd |

carriage return |

|

27 |

0x1b |

escape |

|

34 |

0x22 |

double quote |

|

39 |

0x27 |

single quote (technically not required, but supported) |

|

92 |

0x5c |

backslash |

|

ASCII character with octal code 123; one can specify 1..3 octal digits |

||

|

Unicode character with code U+12BF; one must specify exactly 4 hex digits |

|

Note

|

One of the most widely used control characters, ASCII zero

character (code 0) can be specified as \0 — exactly as it works in

most languages.

|

|

Caution

|

Octal notation is prone to errors: due to its flexible

length, it can swallow decimal digits that appear after the code as

part of octal specification. For example, a\0b is three characters:

a, ASCII zero, b. However, 1\02 is interpreted as two

characters: 1 and ASCII code 2, as \02 is interpreted as one octal

escape sequence.

|

TODO: Enums

Streams are internal objects that track the byte stream that we

parse and the state of parsing (i.e. where the pointer is). There is no

way to declare a stream-type attribute directly by parsing

instructions or specify it as a literal. The typical way to get stream

objects is to query the _io attribute from a user-defined object: that

will give us a stream associated with this particular object.

6.2. Composite data types

There are two composite data types in the expression language (i.e. data types which include other types as components).

6.2.1. User-defined types

User-defined types are the types one defines using .ksy syntax —

i.e. the top-level structure and all substructures defined in the types key.

Normally, they are translated into classes (or their closest available equivalent — i.e. a storage structure with members + access members) in the target language.

6.2.2. Arrays

Array types are just what one might expect from an all-purpose, generic

array type. Arrays come from either using the repetition syntax

(repeat: …) in an attribute specification, or by specifying a literal

array. In any case, all Kaitai Struct arrays have an underlying data type that they

store, i.e. one can’t put strings and integers into the same

array. One can do arrays based on any primitive data type or composite

data type.

|

Note

|

"True" array types (described in this section) and "byte arrays" share the same literal syntax and lots of method APIs, but they are actually very different types. This is done on purpose, because many target languages use very different types for byte arrays and arrays of objects for performance reasons. |

One can use array literals syntax to declare an array (very similar to the syntax used in JavaScript, Python and Ruby). Type will be derived automatically based on the types of values inside brackets, for example:

-

[123, 456, -789]— array of integers -

[123.456, 1.234e+78]— array of floats -

["foo", "bar"]— array of strings -

[true, true, false]— array of booleans -

[a0, a1, b0]— given thata0,a1andb0are all the same objects of user-defined typesome_type, this would be array of user-defined typesome_type

|

Warning

|

Mixing multiple different types in a single array literal

would trigger a compile-time error, for example, this is illegal: [1,

"foo"]

|

6.3. Operators

Literals can be connected using operators to make meaningful

expressions. Operators are type-dependent: for example, the +

operator applied to two integers would mean arithmetic addition, while the same operator

applied to two strings would mean string concatenation.

6.3.1. Arithmetic operators

Can be applied to integers and floats:

-

a + b— addition -

a - b— subtraction -

a * b— multiplication -

a / b— division -

a % b— modulo; note that it’s not a remainder:-5 % 3is1, not-2; the result is undefined for negativeb.

|

Note

|

If both operands are integers, the result of an arithmetic operation is

an integer, otherwise it is a floating point number. For example, that

means that 7 / 2 is 3, and 7 / 2.0 is 3.5.

|

Can be applied to strings:

-

a + b— string concatenation

6.3.2. Relational operators

Can be applied to integers, floats and strings:

-

a < b— true ifais strictly less thanb -

a <= b— true ifais less or equal thanb -

a > b— true ifais strictly greater thanb -

a >= b— true ifais greater or equal thanb

Can be applied to integers, floats, strings, booleans and enums (does proper string value comparison):

-

a == b— true ifais equal tob -

a != b— true ifais not equal tob

6.3.3. Bitwise operators

Can only be applied to integers.

-

a << b— left bitwise shift -

a >> b— right bitwise shift -

a & b— bitwise AND -

a | b— bitwise OR -

a ^ b— bitwise XOR

6.3.4. Logical (boolean) operators

Can be only applied to boolean values.

-

not x— boolean NOT -

a and b— boolean AND -

a or b— boolean OR

6.3.5. Ternary (if-then-else) operator

If condition (must be boolean expression) is true, then if_true

value is returned, otherwise if_false value is returned:

condition ? if_true : if_false

// Examples

code == block_type::int32 ? 4 : 8

"It has a header: " + (has_header ? "Yes" : "No")|

Note

|

It is acceptable to mix:

|

|

Caution

|

Using the ternary operator inside a KSY file (which must remain a valid YAML

file) might be tricky, as some YAML parsers do not allow colons ( To ensure maximum compatibility, put quotes around such strings, i.e: |

6.4. Methods

Just about every value in expression language is an object (including

literals), and it’s possible to call methods on it. The common syntax

to use is obj.method(param1, param2, …), which can be abbreviated

to obj.method if no parameters are required.

Note that when the obj in question is a user-defined type, you can

access all its attributes (both sequence and instances) using the same

obj.attr_name syntax. One can chain that to traverse a

chain of substructures: obj.foo.bar.baz (given that obj is a

user-defined type that has a foo field, which points to a user-defined

type that has a bar field, and so on).

There are a few pre-defined methods that form a kind of "standard library" for expression language.

6.4.1. Integers

| Method name | Return type | Description |

|---|---|---|

|

String |

Converts an integer into a string using decimal representation |

6.4.2. Floating point numbers

| Method name | Return type | Description |

|---|---|---|

|

Integer |

Truncates a floating point number to an integer |

6.4.3. Byte arrays

| Method name | Return type | Description |

|---|---|---|

|

Integer |

Number of bytes in the array |

|

String |

Decodes (converts) a byte array encoded using the specified |

6.4.4. Strings

| Method name | Return type | Description |

|---|---|---|

|

Integer |

Length of a string in number of characters |

|

String |

Reversed version of a string |

|

String |

Extracts a portion of a string between character at offset |

|

Integer |

Converts a string in decimal representation to an integer |

|

Integer |

Converts a string with a number stored in |

6.4.5. Enums

| Method name | Return type | Description |

|---|---|---|

|

Integer |

Converts an enum into the corresponding integer representation |

6.4.6. Booleans

| Method name | Return type | Description |

|---|---|---|

|

Integer |

Returns |

6.4.7. User-defined types

All user-defined types can be queried to get attributes (sequence

attributes or instances) by their name. In addition to that, there are

a few pre-defined internal methods (they all start with an underscore

_, so they don’t clash with regular attribute names):

| Method name | Return type | Description |

|---|---|---|

|

User-defined type |

Top-level user-defined structure in current file |

|

User-defined type |

Structure that produced this particular instance of user-defined type |

|

Stream |

Stream associated with this object of user-defined type |

6.4.8. Array types

| Method name | Return type | Description |

|---|---|---|

|

Array base type |

Gets first element of the array |

|

Array base type |

Gets last element of the array |

|

Integer |

Number of elements in the array |

|

Array base type |

Gets the minimum element of the array |

|

Array base type |

Gets the maximum element of the array |

6.4.9. Streams

| Method name | Return type | Description |

|---|---|---|

|

Boolean |

|

|

Integer |

Total size of the stream in bytes |

|

Integer |

Current position in the stream, in bytes from the beginning of the stream |

7. Advanced techniques

7.1. Advanced switching

7.1.1. Switching over strings

One can use type switching over other comparable values,

not just integers. For example, one can switch over a string value. Note

that the left side (key) of a cases map is a full-featured Kaitai Struct expression,

thus all we need is to specify a string. Don’t forget that there’s

still YAML syntax that might get in a way, so we effectively need to

quote strings twice: once for Kaitai Struct expression language, and once in the

YAML representation to save these quotes from being interpreted by

a YAML parser, i.e.:

seq: